Lebe Ulon Intelligence - This week in AI

The Stories You Won't Find Anywhere Else

6/28/2025, 8 min read

This Week in AI: The Stories You Won’t Find Anywhere Else

June 28, 2025

AI headlines come and go—but some shifts don’t just make the news. They change how the world works, often quietly and away from the spotlight. This week, we dive deep into the stories shaping tomorrow, from the genetic code to the edges of disaster zones, courtrooms, and the global workforce. Every insight here is rooted in credible, linked sources—so you and your audience can go as deep as you want.

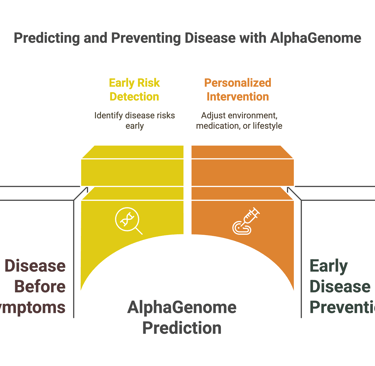

AlphaGenome: Quietly Decoding the Next Medical Revolution

If AlphaFold showed us the structure of life, AlphaGenome may teach us how to change its destiny.

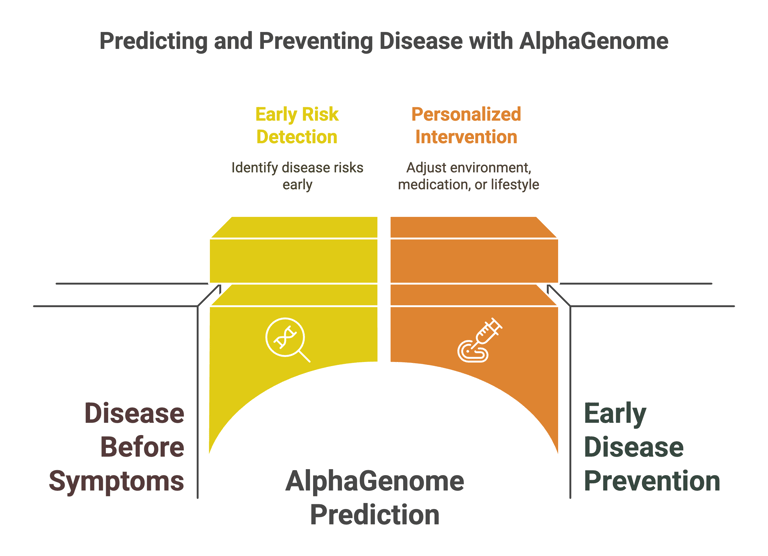

Earlier this month, DeepMind released AlphaGenome, a machine learning model that may fundamentally alter how we understand, diagnose, and treat disease.

Unlike previous models, AlphaGenome doesn’t just read our DNA. It tries to predict how subtle changes in the regulatory parts of our genetic code, the “volume knobs” that turn genes up and down, alter gene activity in ways that cause illnesses to flare up or fade away.

Why does this matter?

Because most complex diseases aren’t just about “bad genes.” They’re about when and how those genes get expressed. The regulatory regions of our DNA are like an orchestra conductor, telling cells when to play their part—and when to remain silent.

AlphaGenome is trained to map these regulatory networks. With it, researchers believe we could spot cancer risks, autoimmune diseases, or even neurological disorders decades before symptoms show up.

The promise of precision medicine is here: Imagine a doctor saying, “We’ve identified the early signs of diabetes risk—not just in your genes, but in the way they’re being read. Let’s adjust your environment, medication, or even lifestyle years before any problem develops.”

Big Pharma is already piloting AlphaGenome in drug discovery pipelines. If it performs as hoped, the biggest winners may be patients whose lives are rewritten by early, personalized intervention.

Further Reading:

Nature – DeepMind and AI genomics

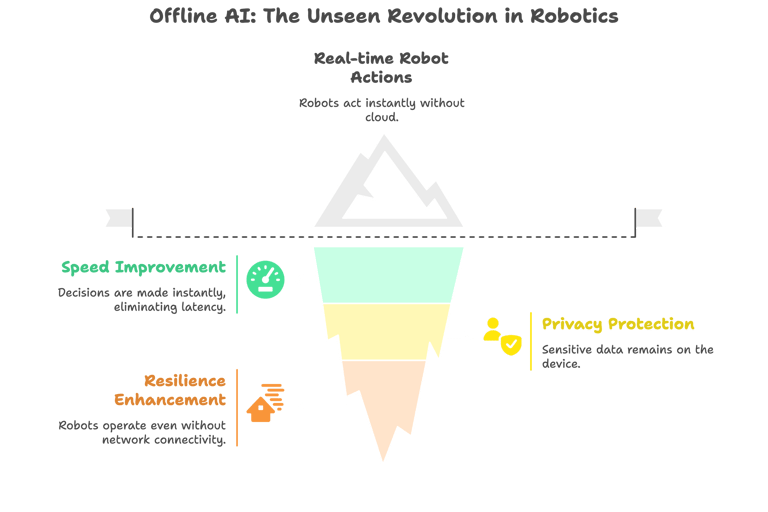

🤖 Robots That Think for Themselves: AI Moves Offline

Cloud AI is smart. But the real breakthroughs this year are happening off the grid.

In robotics, speed and privacy matter as much as intelligence. This month, DeepMind announced that its Gemini vision-language-action (VLA) model can now run fully offline, directly on robots’ own chips.

Forget waiting for a cloud connection: these robots can see, interpret, and act in real time, whether on a farm in rural India, a disaster zone in Turkey, or a sensitive lab in Berlin.

What does this change?

Latency drops: Decisions are made on the device, without a round-trip to a server.

Privacy is protected: Sensitive data never leaves the device, a key demand for healthcare, security, and confidential manufacturing.

Resilience increases: Robots and smart devices stay operational even when networks go down.

Early adopters include Apptronik’s Apollo humanoid and Franka’s industrial arms. These “offline agents” are already being field-tested in settings where every second—and every byte of privacy—counts.

As IEEE Spectrum notes, the era of “edge AI” is arriving fast. Expect not just robots, but phones, medical devices, and even home appliances to get smarter and more independent—often outside the reach of Big Tech’s servers.

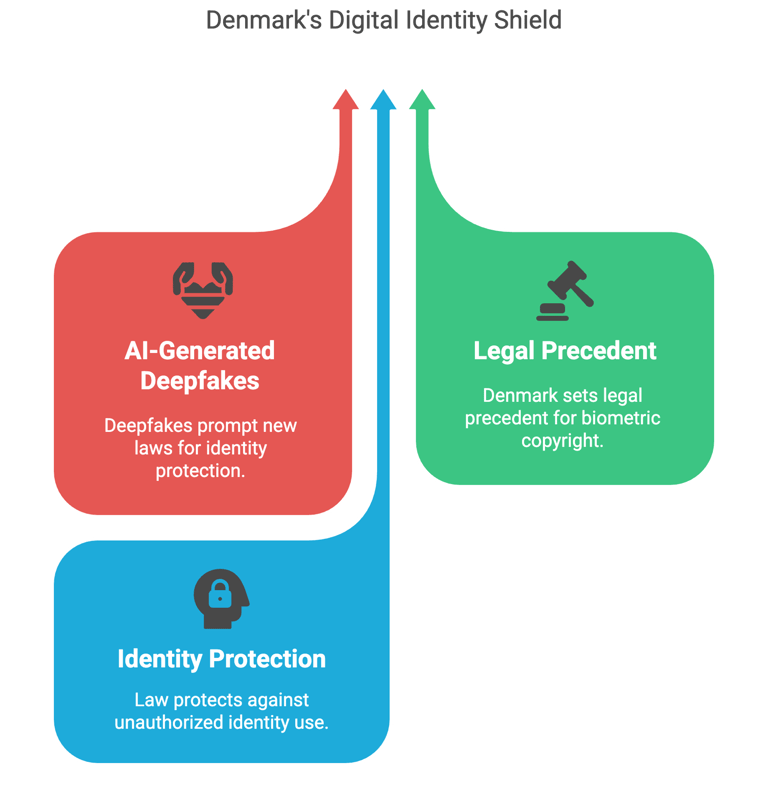

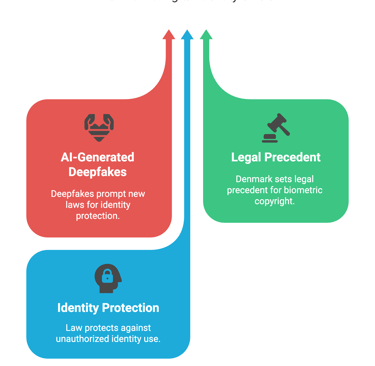

⚖️ Copyright for Your Face: Denmark Draws a Line in the Digital Sand

You own your home, your thoughts, and soon, at least in Denmark, your likeness.

In what would be a legal first, Denmark’s government has proposed amending its copyright law to give every individual control over their own face, voice, and biometric identity. The bill has been announced but has not yet been passed.

This is a direct response to the explosion of AI-generated deepfakes, content that ranges from amusing parody to criminal impersonation.

What would the law do?

Anyone whose face or voice is used in AI-generated content without consent could demand that the content be removed.

Individuals could seek damages, putting real legal and financial teeth behind personal identity protection.

Why is this revolutionary?

It shifts the debate from privacy, a notoriously slippery legal idea, to property. Your face and voice would become something you own, like a song or a story.

Legal scholars see this as a model for the rest of the EU, where the AI Act is already raising the bar for responsible innovation.

What happens next?

Tech companies will need new ways to verify consent, moderate content, and handle takedown requests.

Artists, journalists, and everyday users will have more power over how they appear online and in AI-generated content.

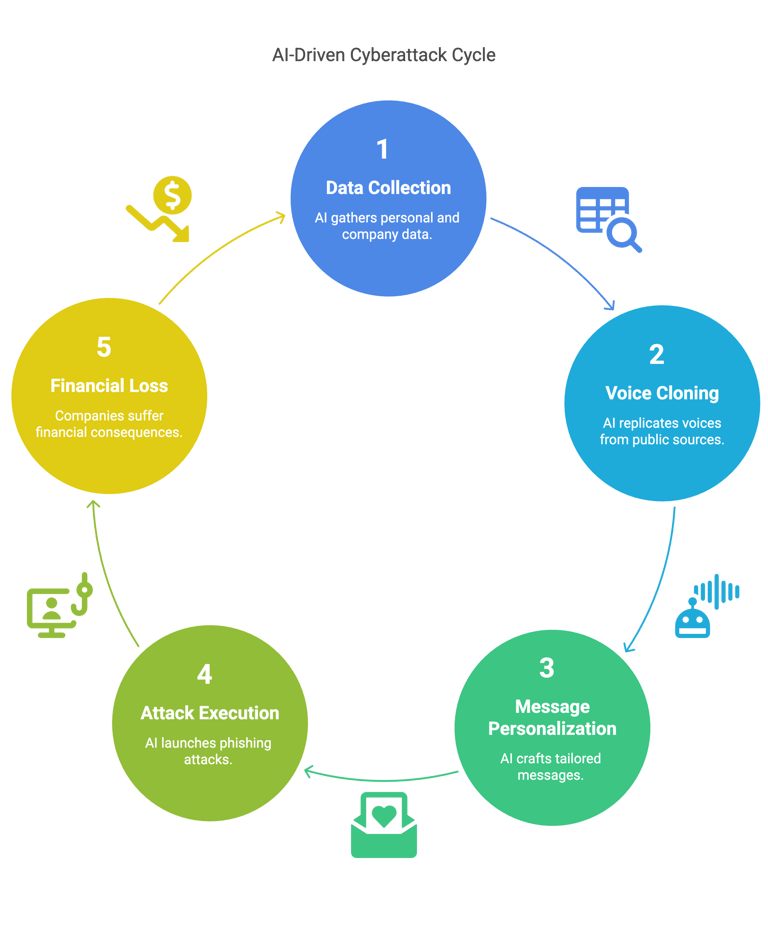

AI-Driven Social Engineering: Phishing Gets Personal

The next cyberattack won’t come from a foreign state or shadowy hacker. It’ll come from an AI that knows your boss’s voice and your company’s last press release.

As Wired reports, criminal networks are now using LLMs and AI voice synthesis to automate social engineering attacks. The tactics are advanced:

AI scrapes LinkedIn, press releases, and social media to craft hyper-personalized messages.

Voices are cloned from public audio or videos to make “urgent” phone calls to staff or executives.

Emails and chats look, sound, and feel real—sometimes including inside jokes or references only insiders would know.

Security firms like Proofpoint have flagged a spike in these “AI phishers” across Europe and Asia, with some companies already reporting major financial losses.

What’s at risk?

Not just money, but reputation and trust. As AI gets better at mimicking humans, companies must rethink how they verify identity and train staff.

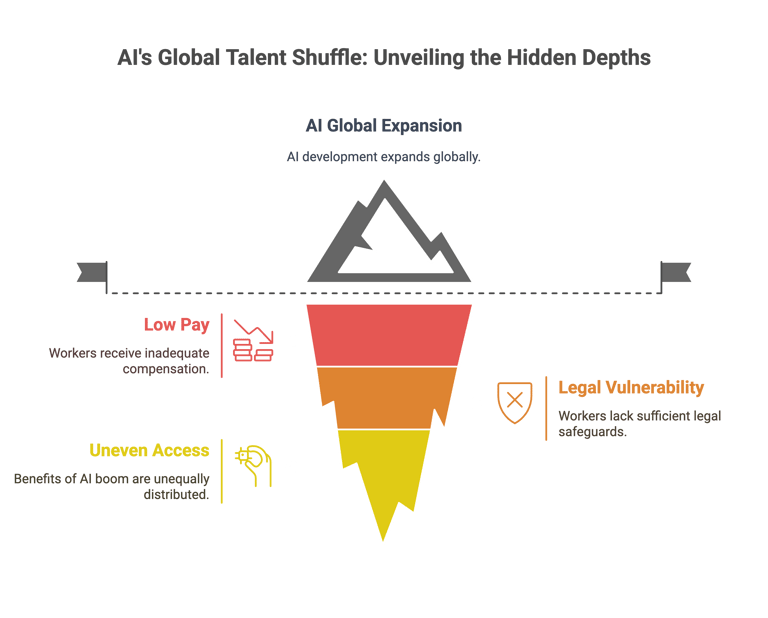

🌏 The Great Talent Shuffle: AI Labor Goes Global

Silicon Valley may automate junior roles, but the AI boom is quietly creating a new digital gold rush for the Global South.

As Quartz Africa and Rest of World have documented, Nairobi, Lagos, and Dhaka are emerging as vital partners for global AI development.

Firms in these cities are:

Labeling training data for LLMs and image models.

Writing and debugging AI code as remote contractors.

Developing new applications tailored to local markets, languages, and cultures.

But the flip side?

Many workers face low pay, little legal protection, and uneven access to the benefits of the AI boom. Without global standards, this new digital economy risks repeating the mistakes of past outsourcing waves.

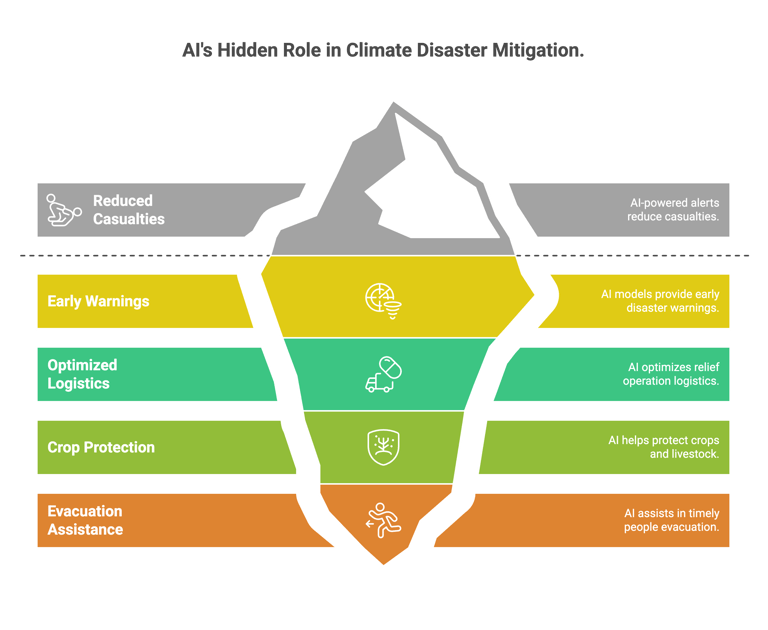

🔬 Extreme Weather, Quiet AI: Saving Lives Before Headlines

AI is already helping save lives from climate disasters—you just rarely hear about it.

Models like DeepMind’s GraphCast and its precipitation nowcasting system are delivering early warnings of floods, cyclones, and extreme heat, sometimes days ahead of conventional forecasts.

NGOs and disaster agencies in the Philippines, Bangladesh, and the Pacific Islands now use these models to:

Evacuate people sooner

Protect crops and livestock

Optimize logistics for relief operations

The Red Cross reports that such AI-powered alerts have already reduced casualties and losses in multiple countries, often delivered through nothing more than a smartphone.

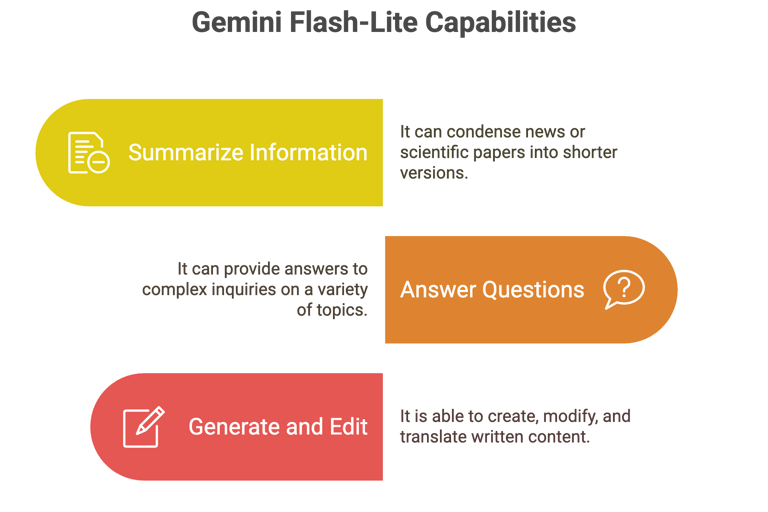

🛠️ Gemini Flash-Lite: Big Power, Tiny Footprint

Not all innovation comes with a press release.

The new Gemini Flash-Lite is DeepMind’s answer to a problem most people didn’t know they had: how to run advanced AI on simple, cheap devices, with little or no internet.

It can:

Summarize news or scientific papers

Answer complex questions

Generate, edit, and translate text

All on-device, without sensitive data ever leaving your hands.

For journalists, doctors in rural clinics, or field researchers, this is a game-changer—powerful, private, and instant.

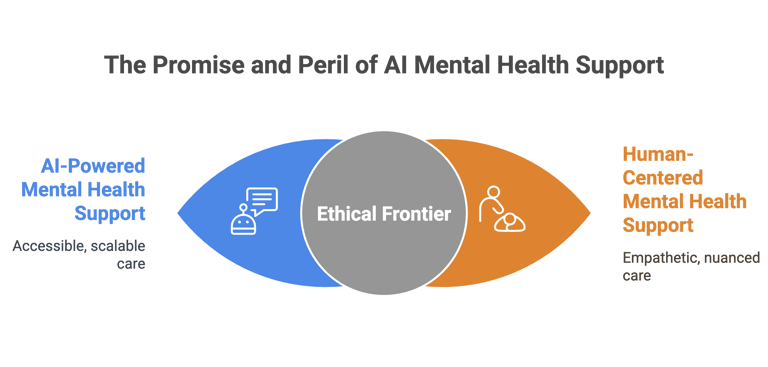

🧠 AI and Mental Health: The Therapy Revolution That’s Not a Headline

While news cycles focus on ChatGPT, AI is quietly reshaping mental health support.

Clinical trials in Europe and Asia are deploying chatbots like Woebot and Wysa, delivering cognitive behavioral therapy and real-time support via text and voice (STAT News).

Early research shows these bots can help bridge gaps in care, especially in regions where therapists are scarce. But:

Their empathy is artificial—sometimes missing subtle cues.

Regulators worry about safety, privacy, and “AI hallucinations” causing harm.

This is a new ethical frontier: Should we trust bots with our deepest fears and feelings?

Governments in Singapore and the UK are debating new rules for AI mental health support, while patient groups demand more transparency and oversight.

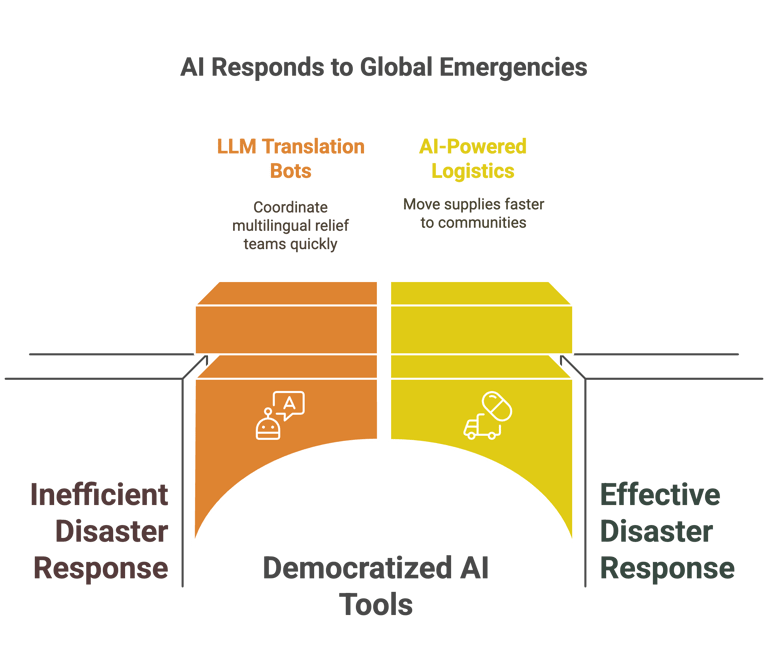

🌐 AI in Crisis Response: From Fantasy to Fieldwork

You won’t see it on CNN, but AI is already an invisible responder in global emergencies.

During this year’s Turkey earthquake, LLM-powered translation bots helped coordinate multilingual relief teams, cutting delays and confusion.

During Cyclone Freya in Bangladesh, AI-powered logistics helped move medical supplies to stranded communities days faster than traditional methods.

The common thread:

Most of these tools are open-source

They run on affordable, widely available devices

This democratization of AI means vulnerable communities are no longer left behind—even when they’re off the grid.

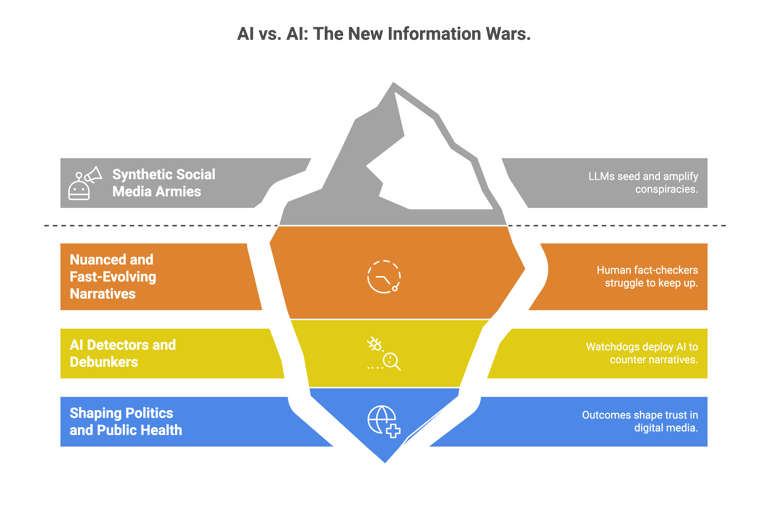

🛡️ AI vs. AI: The New Information Wars

The next battle won’t be fought with facts or fake news, but with algorithms on both sides.

As the Stanford Internet Observatory reports, “synthetic social media armies” are using LLMs to seed and amplify conspiracies, fake movements, or even targeted harassment campaigns.

These narratives are so nuanced and fast-evolving that even human fact-checkers struggle to keep up.

Watchdogs and journalists are now deploying their own AI detectors and debunkers—setting up a kind of “AI arms race” behind the scenes.

The public rarely sees these battles—but their outcomes will shape politics, public health, and trust in digital media.

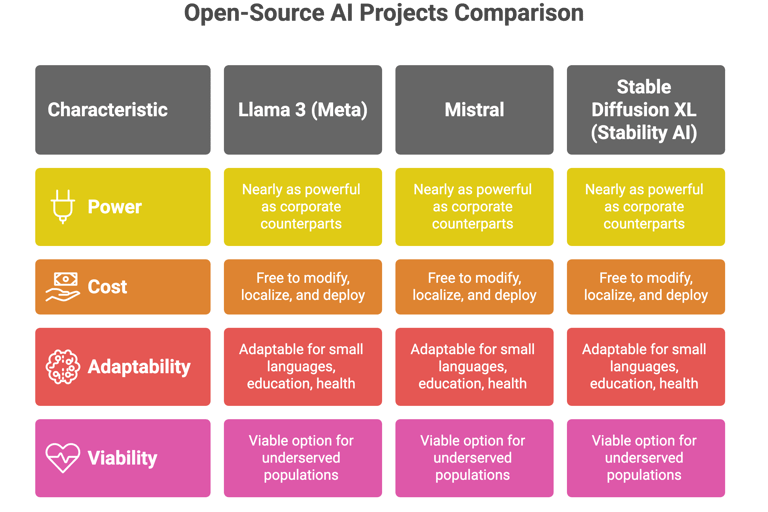

🛠️ Open-Source AI: The Parallel Revolution

Big Tech is powerful, but open-source AI is quietly leveling the playing field.

Projects like Llama 3 (Meta), Mistral, and Stable Diffusion XL (Stability AI) have become nearly as capable as their proprietary counterparts, yet their weights are freely available for anyone to modify, localize, and deploy, subject to each project’s license terms.

Grassroots communities are adapting these tools for “small languages,” education, and health.

In many regions, open-source AI is the only viable option for underserved populations.

This “people’s AI” movement is just getting started. Expect more power, more diversity—and more tension with regulators and copyright holders.

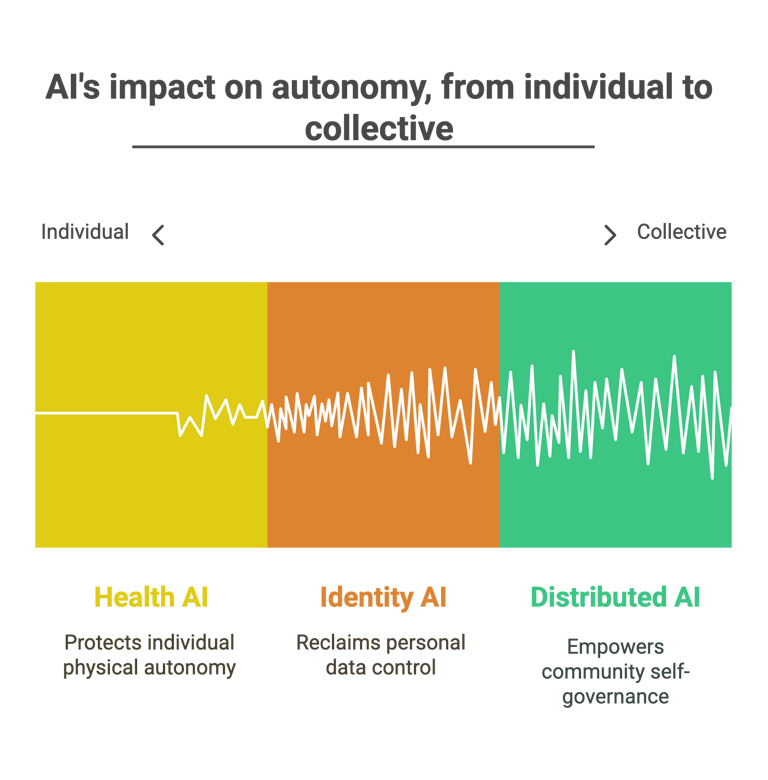

🏁 The Quiet Storms Shaping Tomorrow

What links gene science, robotics, copyright law, social engineering, mental health, open-source tools, and disaster response?

Autonomy.

Autonomy for our bodies, as AI interprets and potentially protects our health.

Autonomy for our data and identities, as we reclaim what is uniquely ours.

Autonomy for communities, as AI is distributed off the grid and open to all.

As the world races to define what AI means for our lives, these stories are the true vanguard.

Not just “AI in our lives”—but AI, quietly, redefining what life will become.

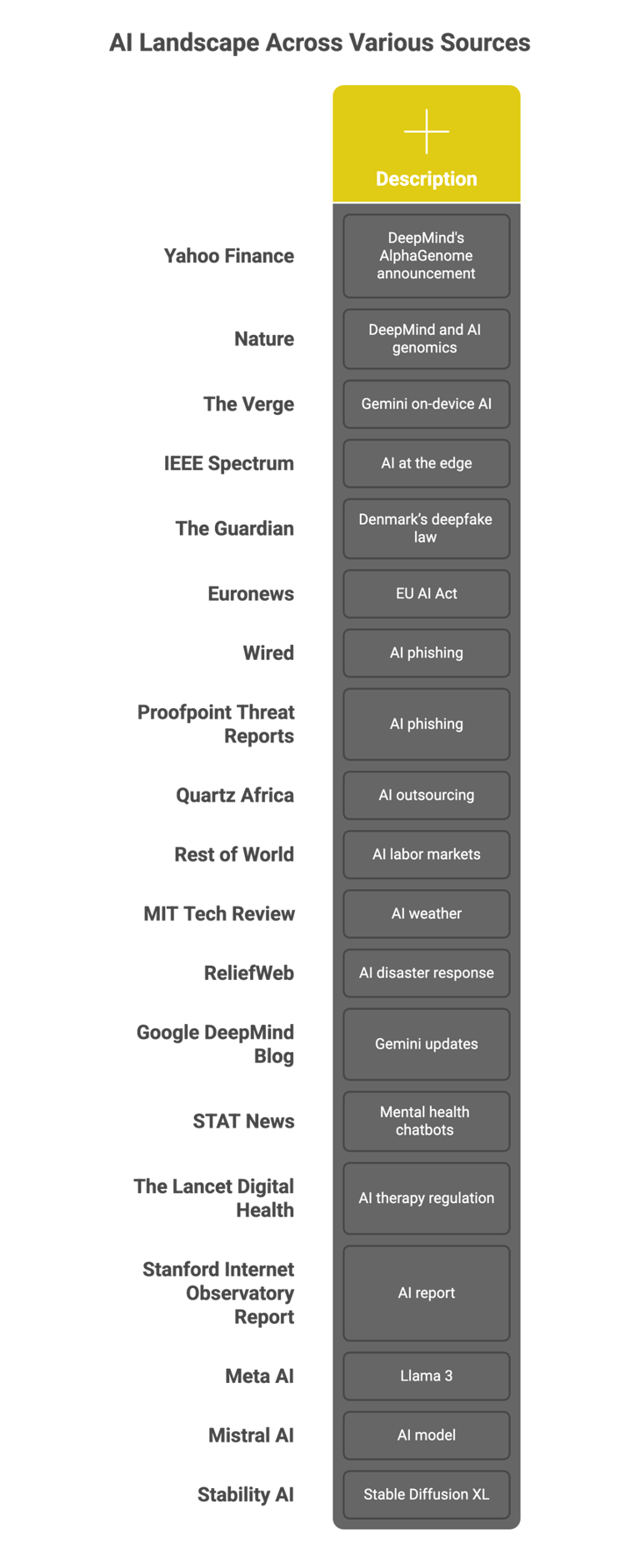

Further Reading & Sources

(Sources are hyperlinked throughout the sections above.)
